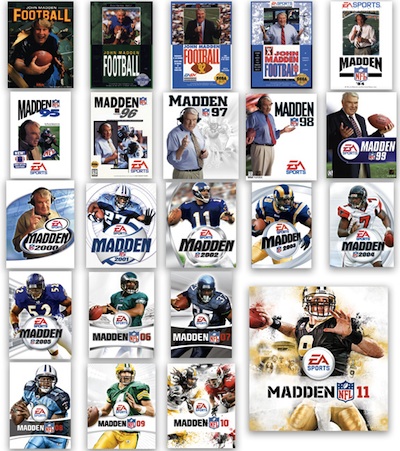

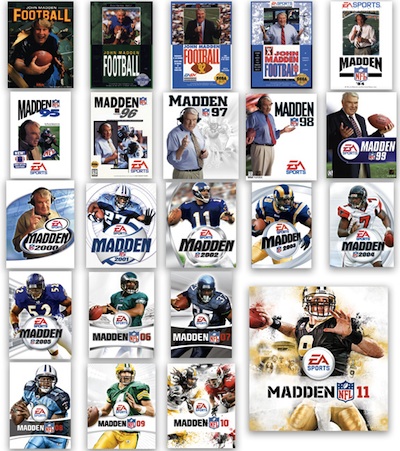

1982

Harvard grad and former Apple employee Trip Hawkins founds video game maker Electronic Arts, in part to create a football game; one year later, the company releases “One-on-One: Dr. J vs. Larry Bird,” the first game to feature licensed sports celebrities. Art imitates life.

1983-84

Hawkins approaches former Oakland Raiders coach and NFL television analyst John Madden to endorse a football game. Madden agrees, but insists on realistic game play with 22 on-screen players, a daunting technical challenge.

1988-90

EA releases the first Madden football game for the Apple II home computer; a subsequent Sega Genesis home console port blends the Apple II game’s realism with control pad-heavy, arcade-style action, becoming a smash hit.

You can measure the impact of “Madden” through its sales: as many as 2 million copies in a single week, 85 million copies since the game’s inception and more than $3 billion in total revenue. You can chart the game’s ascent, shoulder to shoulder, alongside the $20 billion-a-year video game industry, which is either co-opting Hollywood (see “Tomb Raider” and “Prince of Persia”) or topping it (opening-week gross of “Call of Duty: Modern Warfare 2”: $550 million; “The Dark Knight”: $204 million).

…

Some of the pain was financial. Just as EA brought its first games to market in 1983, the home video game industry imploded. In a two-year span, Coleco abandoned the business, Intellivision went from 1,200 employees to five and Atari infamously dumped thousands of unsold game cartridges into a New Mexico landfill. Toy retailers bailed, concluding that video games were a Cabbage Patch-style fad. Even at EA — a hot home computer startup — continued solvency was hardly assured.

…

In 1988, “John Madden Football” was released for the Apple II computer and became a modest commercial success.

…

THE STAKES WERE HIGH for a pair of upstart game makers, with a career-making opportunity and a $100,000 development contract on the line. In early 1990, Troy Lyndon and Mike Knox of San Diego-based Park Place Productions met with Hawkins to discuss building a “Madden” game for Sega’s upcoming home video game console, the Genesis. …

Because the game that made “Madden” a phenomenon wasn’t the initial Apple II release; it was the Genesis follow-up, a surprise smash spawned by an entirely different mindset. Hawkins wanted “Madden” to play out like the NFL. Equivalent stats. Similar play charts. Real football.

…

In 1990, EA had a market cap of about $60 million; three years later, that number swelled to $2 billion.

…

In 2004, EA paid the NFL a reported $300 million-plus for five years of exclusive rights to teams and players. The deal was later extended to 2013. Just like that, competing games went kaput. The franchise stands alone, triumphant, increasingly encumbered by its outsize success.

…

Hawkins left EA in the early 1990s to spearhead 3DO, an ill-fated console maker that became a doomed software house. An icy rift between the company and its founder ensued.